Touchy-Feely with DOM Events: Rethinking Cross-Device User Interaction

This article was originally written for the Nokia Code Blog earlier this month, and I am re-posting it here with permission.

There have been numerous ways for users to interact with web pages on mobile phones. Historically, users navigated the mobile web by pressing physical buttons (arrow keys, soft keys, etc.), while some devices required a stylus.

In the last several years, devices with touch-enabled screens have been adopted at such a rapid rate that touch interaction has become ubiquitous. Now we have tablets that take input not only from touch, but also from keyboards and trackpads attached as optional peripherals.

So what does this mean for you as a web developer? It means you need to detect the user's input method correctly, and design the right user experience for it into your web apps.

In this article, I will explain the state of touch APIs in terms of current web standards, and show you some sample code to demonstrate.

Touch Events V.1

The touch interface was widely popularized when Apple’s iPhone came out, and DOM touch events were first defined and implemented by Apple in Safari on iOS. Since then, other browser vendors such as Google and Mozilla have adopted them, and web developers have come to treat them as the de facto standard.

W3C defined the touch events specification as a set of low-level events that represent one or more points of contact with a touch-sensitive surface, as well as changes to those points with respect to the surface and any DOM elements displayed on or associated with it.

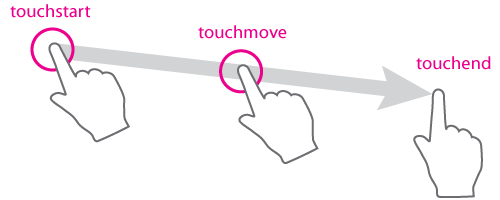

The event types are touchstart, touchend, touchmove, and touchcancel. Two more, touchenter and touchleave, were proposed in drafts of the specification but have seen little browser support.

Example

The following code snippet, used in this example demo, determines the position (in pixel coordinates) of a finger within a canvas element when a touch action is initiated by the user.

The demo tracks a user’s touch points, allowing the user to draw on a kitty cat (in <canvas>). It will only work in browsers that support touch events, running on touch-enabled devices. If you try it on a desktop using a mouse or trackpad, you’ll notice that nothing happens on screen.

    var canvas = document.getElementById('drawCanvas');

    canvas.addEventListener('touchstart', function(e) {
      e.preventDefault(); // canceling default behavior such as scrolling
      var x = e.targetTouches[0].pageX - canvas.offsetLeft;
      var y = e.targetTouches[0].pageY - canvas.offsetTop;
    }, false);
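The snippet above reads only the first touch point. Since touch events can report several points of contact at once, a small helper can map every entry in targetTouches to canvas coordinates. This is only a minimal sketch: `touchPoints` is a hypothetical helper name, not part of the demo, and it assumes (as the snippet above does) that the canvas's offsetLeft and offsetTop are measured from the page origin.

```javascript
// Hypothetical helper: convert every active touch on the element
// into coordinates relative to that element.
function touchPoints(e, el) {
  var points = [];
  for (var i = 0; i < e.targetTouches.length; i++) {
    var t = e.targetTouches[i];
    points.push({
      id: t.identifier, // stays the same for a finger until it lifts
      x: t.pageX - el.offsetLeft,
      y: t.pageY - el.offsetTop
    });
  }
  return points;
}

// Browser-only wiring; skipped when there is no DOM (for example in Node).
if (typeof document !== 'undefined') {
  var drawCanvas = document.getElementById('drawCanvas');
  if (drawCanvas) {
    drawCanvas.addEventListener('touchmove', function(e) {
      e.preventDefault(); // keep the page from scrolling while drawing
      var points = touchPoints(e, drawCanvas);
      // draw with points[0], points[1], ...
    }, false);
  }
}
```

Because the helper takes the event and the element as plain arguments, it can be tried out with simple stand-in objects before wiring it to a real canvas.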

U Can’t Touch This

So how do you make it work on the desktop, where touch events do not fire for non-touch input? To support a mouse or trackpad, you need to use DOM mouse events.

    canvas.addEventListener('mousedown', function(e) {
      // Use offsetX/offsetY where available; otherwise fall back to layerX/layerY
      var x = e.offsetX || (e.layerX - canvas.offsetLeft);
      var y = e.offsetY || (e.layerY - canvas.offsetTop);
    }, false);
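The offsetX and layerX properties have not always behaved identically across browsers. As an alternative sketch (a different technique from the snippet above, and not what the demo uses), the position can be derived from clientX/clientY and the element's bounding rectangle. `mousePosition` is a hypothetical helper name, and the sketch ignores borders, padding, and any CSS scaling of the canvas.

```javascript
// Hypothetical helper: mouse position relative to the element's top-left corner.
function mousePosition(e, el) {
  var rect = el.getBoundingClientRect(); // element box in viewport coordinates
  return {
    x: e.clientX - rect.left,
    y: e.clientY - rect.top
  };
}

// Browser-only wiring; skipped when there is no DOM (for example in Node).
if (typeof document !== 'undefined') {
  var mouseCanvas = document.getElementById('drawCanvas');
  if (mouseCanvas) {
    mouseCanvas.addEventListener('mousedown', function(e) {
      var pos = mousePosition(e, mouseCanvas);
      // start drawing at pos.x, pos.y
    }, false);
  }
}
```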

So I wrote another demo to demonstrate this.

This demo uses mouse events instead of touch events, rather than both. Try it in a desktop browser to see how it works, and then see how it fails on touch-enabled devices.

To make both user input methods work, you need to support both touch and mouse events together.

When you handle touchstart, touchmove, and touchend, you also need to handle mousedown, mousemove, and mouseup respectively.
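One way to keep the two sets of listeners in step is to register them from a single table. The following is a minimal sketch, not the code from the demo; `EVENT_PAIRS` and `bindStartMoveEnd` are hypothetical names, and the handlers are assumed to work out for themselves whether they received a touch or a mouse event.

```javascript
// Touch event types and the mouse event types that play the same role.
var EVENT_PAIRS = [
  { touch: 'touchstart', mouse: 'mousedown' },
  { touch: 'touchmove',  mouse: 'mousemove' },
  { touch: 'touchend',   mouse: 'mouseup' }
];

// Hypothetical helper: attach each handler under both its touch and mouse name.
// handlers = { start: fn, move: fn, end: fn }
function bindStartMoveEnd(el, handlers) {
  var order = [handlers.start, handlers.move, handlers.end];
  for (var i = 0; i < EVENT_PAIRS.length; i++) {
    el.addEventListener(EVENT_PAIRS[i].touch, order[i], false);
    el.addEventListener(EVENT_PAIRS[i].mouse, order[i], false);
  }
}
```

On touch devices, browsers typically also send emulated mouse events after a tap. Calling e.preventDefault() in the touch handlers, as in the first touchstart snippet, usually stops the same gesture from being handled twice.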

The completed demo is at the end of this article.

Pointer Events

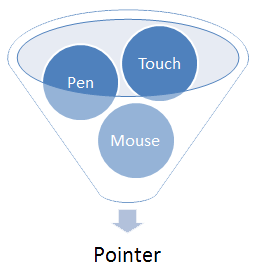

Now you probably wish there was a single set of events to handle both the iOS touch event model and the standard mouse events. Well, it turns out there really is such a thing.

Microsoft has been working on something called MSPointers to support multiple types of user input, and they submitted it to the W3C for standardization last year. It has now reached Last Call. This new, alternative standard event model is called Pointer Events, and it is designed to handle hardware-agnostic pointer input from devices like a mouse, pen, or touchscreen.

Besides Microsoft, industry members including Nokia, Google, and Mozilla are working to solve this problem in the W3C Pointer Events Working Group.

This feature has already been implemented in Internet Explorer 10, and has been prototyped in an experimental build of Chromium.

The simple example below gets the x and y pointer coordinates using pointer events. You will probably notice that the code looks similar to the mouse event version, which you may find less cumbersome than Touch Events v1.

    canvas.addEventListener('pointerdown', function(e) {
      var x = e.offsetX || (e.layerX - canvas.offsetLeft);
      var y = e.offsetY || (e.layerY - canvas.offsetTop);
    }, false);

To support Internet Explorer 10 you need to use the prefixed versions, so the pointerdown, pointermove, and pointerup event types should be written as MSPointerDown, MSPointerMove, and MSPointerUp respectively.
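The mapping between standard and prefixed names can be kept in one place. Below is a minimal sketch under a few assumptions: `MS_PREFIXED` and `pointerEventName` are hypothetical names, unprefixed support is detected by the presence of `window.PointerEvent`, and IE10 support by `navigator.msPointerEnabled`. Only the three event types named above are covered.

```javascript
// Standard pointer event names and their IE10 prefixed spellings.
var MS_PREFIXED = {
  pointerdown: 'MSPointerDown',
  pointermove: 'MSPointerMove',
  pointerup: 'MSPointerUp'
};

// Hypothetical helper: return the event name the given window object
// understands, or null if no pointer event support is detected.
function pointerEventName(name, win) {
  if (win.PointerEvent) {
    return name; // unprefixed Pointer Events
  }
  if (win.navigator && win.navigator.msPointerEnabled) {
    return MS_PREFIXED[name] || null; // IE10 prefixed events
  }
  return null;
}
```

In a page, this could be used as `canvas.addEventListener(pointerEventName('pointerdown', window), handler, false)` after checking that the result is not null.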

So try this demo on IE10 on desktop and/or Windows Phone 8.

I <3 You All

As I mentioned earlier, at this moment the only available browsers that support Pointer Events are IE10 and a prototype build of Chromium, so it’s a good idea to include iOS-style touch events and mouse events until other browsers catch up.

    var downEvent = isTouchSupported ? 'touchstart' : (isPointerSupported ? 'MSPointerDown' : 'mousedown');

    canvas.addEventListener(downEvent, function(e) {
      var x = isTouchSupported ? (e.targetTouches[0].pageX - canvas.offsetLeft) : (e.offsetX || e.layerX - canvas.offsetLeft);
      var y = isTouchSupported ? (e.targetTouches[0].pageY - canvas.offsetTop) : (e.offsetY || e.layerY - canvas.offsetTop);
    }, false);
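The snippet above relies on two flags, isTouchSupported and isPointerSupported, that are defined elsewhere in the full code. One common way to compute them is sketched below; this is an assumption about how such flags might be set, not necessarily how the demo does it, and `detectInput` is a hypothetical function name.

```javascript
// Hypothetical helper: feature-detect input support on a window-like object.
function detectInput(win) {
  // Touch Events: the window exposes an ontouchstart handler property.
  var isTouchSupported = 'ontouchstart' in win;
  // IE10 prefixed pointer events: navigator.msPointerEnabled is true.
  var isPointerSupported = !!(win.navigator && win.navigator.msPointerEnabled);
  var downEvent = isTouchSupported ? 'touchstart'
    : (isPointerSupported ? 'MSPointerDown' : 'mousedown');
  return {
    isTouchSupported: isTouchSupported,
    isPointerSupported: isPointerSupported,
    downEvent: downEvent
  };
}

// In a browser: var input = detectInput(window);
```

Choosing a single event type this way means that a device with both a touchscreen and a mouse responds to only one of them, which is worth keeping in mind for hybrid laptops.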

I have a demo that supports all the methods I’ve mentioned in the article.

Try this on any modern browser, and it should work! The entire code is in this gist for you to take a look at.

Learn More

- W3C: Touch Events version 1

- MDN: Touch events

- W3C: Document Object Model (DOM) Level 3 Events Specification – Mouse Event Types

- W3C: Pointer Events

- MSDN: Pointer and gesture events
